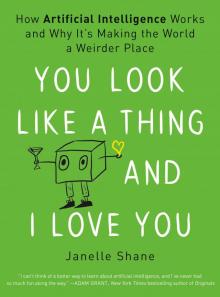

You Look Like a Thing and I Love You

Copyright

Copyright © 2019 by Janelle Shane

Cover design by Kapo Ng; cover art by Kapo Ng and Janelle Shane

Cover copyright © 2019 by Hachette Book Group, Inc.

Hachette Book Group supports the right to free expression and the value of copyright. The purpose of copyright is to encourage writers and artists to produce the creative works that enrich our culture.

The scanning, uploading, and distribution of this book without permission is a theft of the author’s intellectual property. If you would like permission to use material from the book (other than for review purposes), please contact [email protected]. Thank you for your support of the author’s rights.

Voracious / Little, Brown and Company

Hachette Book Group

1290 Avenue of the Americas, New York, NY 10104

littlebrown.com

twitter.com/littlebrown

facebook.com/littlebrownandcompany

First ebook edition: November 2019

Voracious is an imprint of Little, Brown and Company, a division of Hachette Book Group, Inc. The Voracious name and logo are trademarks of Hachette Book Group, Inc.

The publisher is not responsible for websites (or their content) that are not owned by the publisher.

The Hachette Speakers Bureau provides a wide range of authors for speaking events. To find out more, go to hachettespeakersbureau.com or call (866) 376-6591.

All images created by the author, except the following: GANcats image here made available under Creative Commons BY-NC 4.0 license by NVIDIA Corporation; used here with permission. Quickdraw kangaroos images here made available under Creative Commons BY-4.0 license by Google. School plan images here © by Joel Simon; used with permission. Submarine images here © by Danny Karmon, Yoav Goldberg, and Daniel Zoran; used with permission. Skiers image here © by Andrew Ilyas, Logan Engstrom, Anish Athalye, and Jessy Lin; used with permission.

ISBN 978-0-316-52523-7

E3-20190927-JV-NF-ORI

Contents

Cover

Title Page

Copyright

Dedication

INTRODUCTION: AI is everywhere

CHAPTER 1: What is AI?

CHAPTER 2: AI is everywhere, but where is it exactly?

CHAPTER 3: How does it actually learn?

CHAPTER 4: It’s trying!

CHAPTER 5: What are you really asking for?

CHAPTER 6: Hacking the Matrix, or AI finds a way

CHAPTER 7: Unfortunate shortcuts

CHAPTER 8: Is an AI brain like a human brain?

CHAPTER 9: Human bots (where can you not expect to see AI?)

CHAPTER 10: A human-AI partnership

CONCLUSION: Life among our artificial friends

Acknowledgments

Discover More

About the Author

Notes

To my blog readers, who laughed at all the silliness, drew the weird creatures, spotted all the giraffes, and baked the neural net–generated cookies. Thank you for putting up with the horseradish brownies.

To my family, for being my biggest fans.

INTRODUCTION

AI is everywhere

Teaching an AI to flirt wasn’t really my kind of project.

To be sure, I’d done a lot of weird AI projects already. On my blog, AI Weirdness, I’d trained an AI to come up with new names for cats—Mr. Tinkles and Retchion were some of its less successful ones—and asked an AI to generate new recipes, some of which would sometimes call for “peeled rosemary” or handfuls of broken glass. But teaching an AI to sweet-talk humans was something else entirely.

AI learns by example—in this case studying a list of existing pickup lines and using them to generate new ones. The problem: the training dataset on my computer screen was a list of pickup lines that I had collected from various internet sources, all of which were awful. They ranged from cheesy bad puns all the way to rude innuendo. Once I’d trained an AI to imitate them, it would be able to produce thousands more at the push of a button. And, like an impressionable child, it wouldn’t know what it should and shouldn’t imitate. The AI would start with a blank slate, knowing nothing about what pickup lines are (or even what English is), and would learn from the examples, doing its best to imitate all the patterns it found. Including the grossness. It wouldn’t know any better.

I considered giving up on the project, but I had a blog post to write, and I had just spent an inconvenient amount of time collecting the example pickup lines. So I started the training. The AI began to look for patterns in the examples, inventing and testing rules that would help it predict which letters should appear in which order in a pickup line. Finally, training was over. With some trepidation, I asked the AI for some lines:

You must be a tringle? Cause you’re the only thing here.

Hey baby, you’re to be a key? Because I can bear your toot?

Are you a candle? Because you’re so hot of the looks with you.

You’re so beautiful that you say a bat on me and baby.

You look like a thing and I love you.

I was surprised and delighted. The AI’s virtual brain (about the same complexity as a worm’s) wasn’t capable of picking up the subtleties of the dataset, including misogyny or cheesiness. It did its best with the patterns it had managed to glean… and arrived at a different, arguably better, solution to the problem of making a stranger smile.

Though to me its lines were a resounding success, the cluelessness of my AI partner may come as a surprise if your knowledge of AI comes from reading news headlines or science fiction. It’s common to see companies claim that AIs are capable of judging the nuances of human language as well as or better than humans can, or that AIs will soon be able to replace humans in most jobs. AI will soon be everywhere, the press releases claim. And they’re both right—and very wrong.

In fact, AI is already everywhere. It shapes your online experience, determining the ads you see and suggesting videos while detecting social media bots and malicious websites. Companies use AI-powered resume scanners to decide which candidates to interview, and they use AI to decide who should be approved for a loan. The AIs in self-driving cars have already driven millions of miles (with the occasional human rescue during moments of confusion). We’ve also put AI to work in our smartphones, recognizing our voice commands, autotagging faces in our photos, and even applying a video filter that makes it look like we have awesome bunny ears.

But we also know from experience that everyday AI is not flawless, not by a long shot. Ad delivery haunts our browsers with endless ads for boots we already bought. Spam filters let the occasional obvious scam through or filter out a crucial email at the most inopportune time.

As more of our daily lives are governed by algorithms, the quirks of AI are beginning to have consequences far beyond the merely inconvenient. Recommendation algorithms embedded in YouTube point people toward ever more polarizing content, traveling in a few short clicks from mainstream news to videos by hate groups and conspiracy theorists.1 The algorithms that make decisions about parole, loans, and resume screening are not impartial but can be just as prejudiced as the humans they’re supposed to replace—sometimes even more so. AI-powered surveillance can’t be bribed, but it also can’t raise moral objections to anything it’s asked to do. It can also make mistakes when it’s misused—or even when it’s hacked. Researchers have discovered that something as seemingly insignificant as a small sticker can make an image recognition AI think a gun is a toaster, and a low-security fingerprint reader can be fooled more than 77 percent of the time with a single master fingerprint.

People often sell AI as more capable than it actually is, claiming that their AI can do things that are solidly in the realm of science fiction. Others advertise their AI as impartial even while its behavior is measurably biased. And often what people claim as AI performance is actually the work of humans behind the curtain. As consumers and citizens of this planet, we need to avoid being duped. We need to understand how our data is being used and understand what the AI we’re using really is—and isn’t.

On AI Weirdness, I spend my time doing fun experiments with AI. Sometimes this means giving AIs unusual things to imitate—like those pickup lines. Other times, I see if I can take them out of their comfort zones—like the time I showed an image recognition algorithm a picture of Darth Vader and simply asked it what it saw: it declared that Darth Vader was a tree and then proceeded to argue with me about it. From my experiments, I’ve found that even the most straightforward task can cause an AI to fail, as if you’d played a practical joke on it. But it turns out that pranking an AI—giving it a task and watching it flail—is a great way to learn about it.

In fact, as we’ll see in this book, the inner workings of AI algorithms are often so strange and tangled that looking at an AI’s output can be one of the only tools we have for discovering what it understood and what it got terribly wrong. When you ask an AI to draw a cat or write a joke, its mistakes are the same sorts of mistakes it makes when processing fingerprints or sorting medical images, except it’s glaringly obvious that something’s gone wrong when the cat has six legs and the joke has no punchline. Plus, it’s really hilarious.

In the course of my attempts to take AIs out of their comfort zone and into ours, I’ve asked AIs to write the first line of a novel, recognize sheep in unusual places, write recipes, name guinea pigs, and generally be very weird. But from these experiments, you can learn a lot about what AI’s good at and what it struggles to do—and what it likely won’t be capable of doing in my lifetime or yours.

Here’s what I’ve learned:

The Five Principles of AI Weirdness:

• The danger of AI is not that it’s too smart but that it’s not smart enough.

• AI has the approximate brainpower of a worm.

• AI does not really understand the problem you want it to solve.

• But: AI will do exactly what you tell it to. Or at least it will try its best.

• And AI will take the path of least resistance.

So let’s enter the strange world of AI. We’ll learn what AI is—and what it isn’t. We’ll learn what it’s good at and where it’s doomed to fail. We’ll learn why the AIs of the future might look less like C-3PO than like a swarm of insects. We’ll learn why a self-driving car would be a terrible getaway vehicle during a zombie apocalypse. We’ll learn why you should never volunteer to test a sandwich-sorting AI, and we’ll encounter walking AIs that would rather do anything but walk. And through it all we’ll learn how AI works, how it thinks, and why it’s making the world a weirder place.

CHAPTER 1

What is AI?

If it seems like AI is everywhere, it’s partly because “artificial intelligence” means lots of things, depending on whether you’re reading science fiction or selling a new app or doing academic research. When someone says they have an AI-powered chatbot, should we expect it to have opinions and feelings like the fictional C-3PO? Or is it just an algorithm that learned to guess how humans are likely to respond to a given phrase? Or a spreadsheet that matches words in your question with a library of preformulated answers? Or an underpaid human who types all the answers from some remote location? Or—even—a completely scripted conversation where human and AI are reading human-written lines like characters in a play? Confusingly, at various times, all these have been referred to as AI.

For the purposes of this book, I’ll use the term AI the way it’s mostly used by programmers today: to refer to a particular style of computer program called a machine learning algorithm. This chart shows a bunch of the terms I’ll be covering in this book and where they fall according to this definition.

Everything that I’m calling “AI” in this book is also a machine learning algorithm—let’s talk about what that is.

KNOCK, KNOCK, WHO’S THERE?

To spot an AI in the wild, it’s important to know the difference between machine learning algorithms (what we’re calling AI in this book) and traditional (what programmers call rules-based) programs. If you’ve ever done basic programming, or even used HTML to design a website, you’ve used a rules-based program. You create a list of commands, or rules, in a language the computer can understand, and the computer does exactly what you say. To solve a problem with a rules-based program, you have to know every step required to complete the program’s task and how to describe each one of those steps.

But a machine learning algorithm figures out the rules for itself via trial and error, gauging its success on goals the programmer has specified. The goal could be a list of examples to imitate, a game score to increase, or anything else. As the AI tries to reach this goal, it can discover rules and correlations that the programmer didn’t even know existed. Programming an AI is almost more like teaching a child than programming a computer.

Rules-based programming

Let’s say I wanted to use the more familiar rules-based programming to teach a computer to tell knock-knock jokes. The first thing I’d do is figure out all the rules. I’d analyze the structure of knock-knock jokes and discover that there’s an underlying formula, as follows:

Knock, knock.

Who’s there?

[Name]

[Name] who?

[Name] [Punchline]

Once I set this formula in stone, there are only two slots free that the program can control: [Name] and [Punchline]. Now the problem is reduced to just generating these two items. But I still need rules for generating them.

I could set up a list of valid names and a list of valid punchlines, as follows:

Name: Lettuce; punchline: in, it’s cold out here!

Name: Harry; punchline: up, it’s cold out here!

Name: Dozen; punchline: anybody want to let me in?

Name: Orange; punchline: you going to let me in?

Now the computer can produce knock-knock jokes by choosing a name–punchline pair from the list and slotting it into the template. This doesn’t create new knock-knock jokes but only gives me jokes I already know. I might try making things interesting by allowing [it’s cold out here!] to be replaced with a few different phrases: [I’m being attacked by eels!] and [lest I transform into an unspeakable eldritch horror]. Then the program can generate a new joke:

Knock, knock.

Who’s there?

Harry.

Harry who?

Harry up, I’m being attacked by eels!

I could replace [eels] with [an angry bee] or [a manta ray] or any number of things. Then I can get the computer to generate even more new jokes. With enough rules, I could potentially generate hundreds of jokes.
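For readers who like to see this as code, here is a minimal Python sketch of the rules-based approach described above. It is only an illustration under some assumptions: the names `JOKES`, `REASONS`, `ATTACKERS`, `make_joke`, `all_jokes`, and `random_joke` are invented for this example, and the `{reason}` and `{attacker}` placeholders stand in for the bracketed slots in the text. Every joke it can produce comes from rules a human wrote down.

```python
import itertools
import random

# Name/punchline pairs from the list above. "{reason}" marks the slot
# that originally held "it's cold out here!" (placeholder name is an assumption).
JOKES = [
    ("Lettuce", "in, {reason}"),
    ("Harry", "up, {reason}"),
    ("Dozen", "anybody want to let me in?"),
    ("Orange", "you going to let me in?"),
]

# Phrases allowed in the {reason} slot.
REASONS = [
    "it's cold out here!",
    "I'm being attacked by {attacker}!",
    "lest I transform into an unspeakable eldritch horror",
]

# Things allowed in the {attacker} slot.
ATTACKERS = ["eels", "an angry bee", "a manta ray"]


def make_joke(name, punchline, reason, attacker):
    """Slot the chosen pieces into the fixed knock-knock formula."""
    reason = reason.format(attacker=attacker)    # no effect if there is no {attacker} slot
    punchline = punchline.format(reason=reason)  # no effect if there is no {reason} slot
    return "\n".join([
        "Knock, knock.",
        "Who's there?",
        f"{name}.",
        f"{name} who?",
        f"{name} {punchline}",
    ])


def all_jokes():
    """List every distinct joke these rules can produce."""
    jokes = {
        make_joke(name, punchline, reason, attacker)
        for (name, punchline), reason, attacker
        in itertools.product(JOKES, REASONS, ATTACKERS)
    }
    return sorted(jokes)


def random_joke(rng=random):
    """Pick one value for each slot at random and build the joke."""
    name, punchline = rng.choice(JOKES)
    return make_joke(name, punchline, rng.choice(REASONS), rng.choice(ATTACKERS))


print(random_joke())
print(len(all_jokes()), "distinct jokes")
```

With just these lists, the rules yield 12 distinct jokes. Getting to hundreds means a person adding more entries or more slots by hand, which is the defining trait of a rules-based program.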

Depending on the level of sophistication I’m going for, I could spend a lot of time coming up with more advanced rules. I could find a list of existing puns and figure out a way to transform them into punchline format. I could even try programming in pronunciation rules, rhymes, semihomophones, cultural references, and so forth in an attempt to get the computer to recombine them into interesting puns. If I’m clever about it, I can get the program to generate new puns that nobody’s ever thought of. (Although one person who tried this discovered that the algorithm’s list of sayings contained words and phrases that were so old or obscure that almost nobody could understand its jokes.) No matter how sophisticated my joke-making rules get, though, I’m still telling the computer exactly how to solve the problem.

Training AI

But when I train AI to tell knock-knock jokes, I don’t make the rules. The AI has to figure out those rules on its own.

The only thing I give it is a set of existing knock-knock jokes and instructions that are essentially, “Here are some jokes; try to make more of these.” And the materials I give it to work with? A bucket of random letters and punctuation.

Then I leave to get coffee.

The AI gets to work.

The first thing it does is try to guess a few letters of a few knock-knock jokes. It’s guessing 100 percent randomly at this point, so this first guess could be anything. Let’s say it guesses something like “qasdnw,m sne?mso d.” As far as it knows, this is how you tell a knock-knock joke.
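To make that starting point concrete, here is a small Python sketch of a completely untrained guesser. It is not a neural network and not the program I actually used; it only pulls characters at random from a bucket and, as one simple way to keep score, counts how many land in the right position. The contents of `BUCKET` and the names `random_guess` and `fraction_correct` are assumptions made up for this illustration.

```python
import random
import string

# The "bucket" of raw materials: lowercase letters, a space, and a little
# punctuation. The exact contents are an assumption for illustration.
BUCKET = string.ascii_lowercase + " ,.?!'"


def random_guess(length, rng):
    """Draw `length` characters from the bucket, each one chosen at random."""
    return "".join(rng.choice(BUCKET) for _ in range(length))


def fraction_correct(guess, target):
    """Fraction of positions in `target` where the guess has the same character."""
    matches = sum(g == t for g, t in zip(guess, target))
    return matches / len(target)


target = "knock, knock. who's there?"
rng = random.Random()  # pass a seed, e.g. random.Random(0), for repeatable output
guess = random_guess(len(target), rng)

print(repr(guess))
print(f"{fraction_correct(guess, target):.0%} of characters correct")
```

With 32 characters in the bucket, a random guess gets any given position right only about 3 percent of the time, so the output is gibberish nearly every run.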

Then the AI looks at what those knock-knock jokes are actually supposed to be. Chances are it’s very wrong. “All right,” says the AI, and it subtly adjusts its own structure so that it will guess a little more accurately next time. There’s a limit to how drastically it can change itself, because we don’t want it to try to memorize every new chunk of text it sees. But with a minimum of tweaking, the AI can discover that if it guesses nothing but k’s and spaces, it will at least be right some of the time. After looking at one batch of knock-knock jokes and making one round of corrections, its idea of a knock-knock joke looks something like this:
